Overview:

Threat detection is a huge and evolving field. Threat detection can come in form of cyber protection mechanism, or software in perceived important places. What of personal safety? How does one detect danger to themselves?

Goal:

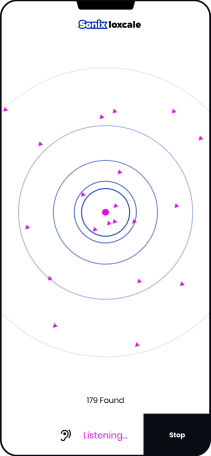

Sonix-Locale is the proposed product which is a threat detection mechanism for mobile phones that can detect danger using energy emissions from weapons.

Data is collected using heat wave detection software and labelled in accordance to weapon and fed to the model.

Business Case:

This is important as it not only improves personal safety of users, it also reduces money spent by the government in threat detection.

This project is proposed to generate revenue for our team in the following ways:

Private company partnership like the telecommunication companies wanting this feature incorporated into their sim cards and phones while software companies will try to out compete each other in funding to make a better detection model.

The more consumers use this feature, the more traction the company gains through traffic.

The government would also be interested in funding the project as it reduces the expenditure and a faster and convenient way to detect threat early before it happens.

Application of AI/ML

My proposed project will use ML/AI to detect and differentiate heat or energy from the human body and various weapons, to enable threat detection. The models will utilize neural networks to automatically:

Detect type of weapon in the vicinity by assailants

Differentiate human heat emission from weapon heat emission for accurate recognition.

Detect human emotions such as aggressiveness.

The outcome to be achieved is improved security in neighborhood and early alert to potential threats to the security agents.

Success Metrics:

Success metrics will be tested not only from the performance of the model from real world data, but also from the performance on MVP testing.

By the decrease in customer acquisition cost over time as our target market will be properly defined and email segmentation.

By the traffic to the website through SEO improvement and faster load time.

We will also measure through our baseline performance traffic which is 10k users within 3 months post-launch.

Data Acquisition:

The dataset will be sourced from LWIR segment of an electromagnetic camera and military weapons detection which will be compared and isolated to get a comprehensive dataset of heat emission from varying hand weapons and the human body.

It would cost a lot to do this and since there would be military detection dataset, there would need to be cleared to undertake such task.

There won’t be data sensitivity issues as what is being extracted is thermal or heat energy.

A large amount of data will be needed to start the process with continuous refreshment as more data is sourced and added.

Data Source:

The bias will be from labelling, comparison, and isolation of dataset. This data will be improved by engaging with domain experts from the inception of the project to minimize anomalies to the barest minimum.

Data Labels:

The labels I would be working with is dependent on the range of weapons chosen and the emotions to be considered.

The MVP will contain labels for:

Guns

Hand grenades

Knives

Emotions like anger, sadness, depression.

The strength of the labelling scheme is that data collection would be well defined and annotators having a clear picture of what to expect.

The downside to this scheme is that different guns give off different heat signal and may cause bias in model performance.

Model Building:

The model will be built using the in-house team as the team is comprised of domain experts. The storage will be outsourced as large amount of data will be used.

Evaluating Results:

Metrics to be used include the ROC AUC curve, precision, and recall. The performance level required is at least at 85% as this is a proposed security apparatus and needs as little false positives and false negative as possible.

Design:

Use Cases:

I’m designing for night walkers who enjoy a bit of the outdoors.

Also, for anyone who feels unsafe walking around in a dangerous neighborhood especially women.

Major case: A young lady leaves work very late and takes a train. On her way home, she feels uneasy as though she’s being followed. The product detects unusual energy such as aggressive emotions or a weapon close by, it alerts the authority first and then signals the lady using the secret tone setup by the user.

This product can be accessed by being integrated in the phone, by phone companies and sim cards by the telecommunication companies which means the product is in-built.

Roll Out:

Like I mentioned earlier, it would be integrated into phones and sim cards with software update incorporating it into existing user’s phones.

The roll-out will include the following milestones:

- Hire team comprising of:

Product owner, product designer, data scientists, ML engineers, QA, weapons specialists and 2 software engineers. This team is expected to be assembled in two months.

- Generate labeled dataset:

We will need to hire an external data annotation company to label the dataset with domain experts vetting this labelling process. We expect this to be done in a month.

- Build and train model:

We expect model building and training to take about 2-3 weeks for a first iteration.

- Test model, and iterate until precision meets specification:

We expect the testing/iteration phase to last around 2 weeks before launching the MVP.

- Launch:

Invariably, we think that we can get to launch in about 6-8 months.

- Iteratively add data:

We plan to inject a new set of data into the model and re-train on approximately a monthly cadence, and we’ll need the data scientist and ML engineer to set aside about a day each time this needs to be done.

Design For Longevity:

As detections are being confirmed, it acts as the feedback to the model. With time more labels would be added, the model retrained on these updated labels and tested to ensure efficiency.

There won’t be difference between training data and real world as the data used for training are real world data curated by the team.

After new model addition, 30% would be used on the updated model and 70% on the current model and performance compared.

Monitor Bias:

User feedback will mostly be used to mitigate unwanted bias.

Conclusion:

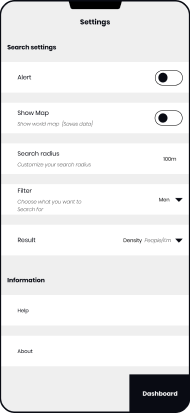

This project is my capstone project from Udacity for AI product Manager and the images are from my frontend development implementation as a HNG intern in 2020. You can view the implementation here. The report can be found here and you can connect with me on LinkedIn .