BERT is a Bidirectional Encoder Representations from Transformers which is designed to pre-train deep bidirectional representations from unlabeled text.

This article is on how to use BERT for sentiment analysis.

After I imported the libraries and loaded the dataset from the file, I started cleaning the data. This involves removing symbols that may interfere during tokenization.

Next I tokenized the data, which in order for me to do, I had to create a BERT layer using the Keras layer from the hub .

Then encoded the sentences for cleaning.

Next, I created padded batches, this way I added the minimum of padding tokens possible. For that, I sorted sentences by length, applied padded_batches and then shuffled.

Model Building:

I created a deep convolutional neural network DCNN with the following parameters: vocab_size, emb_dim=128, nb_filters=50, FFN_units=512, nb_classes=2, dropout_rate=0.1, training=False and name="dcnn".

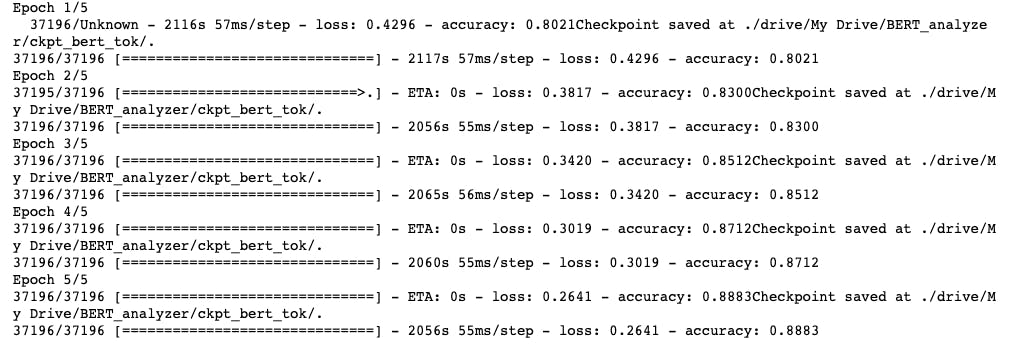

Training:

I trained the model using an else-if statement. If the NB_CLASSES equals 2, loss fucntion will be binary cross-entropy, adam optimizer and metrics equals 'accuracy'. Else, the loss function will be sparse categorical cross-entropy, adam optimizer and sparse metrics accuracy. All with 5 epochs.

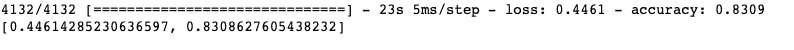

Model Evaluation:

I evaluated the trained model and got;

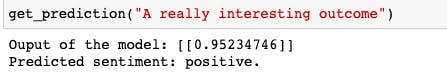

Prediction:

I tested the model's prediction accuracy by entering a sentence and it returned a 95% accuracy with the right analysis.

Conclusion:

This was my project from a Udemy certification course last year and it was my first time of coming across BERT.

The repo of this project can be found here. My LinkedIn profile for comments.